How did Facebook intercept their competitors' encrypted mobile app traffic?

A technical investigation into information uncovered in a class action lawsuit that Facebook had intercepted encrypted traffic from users' devices running the Onavo Protect app in order to gain competitive insights.

28th July 2024 - 👋Hello Hackernews!

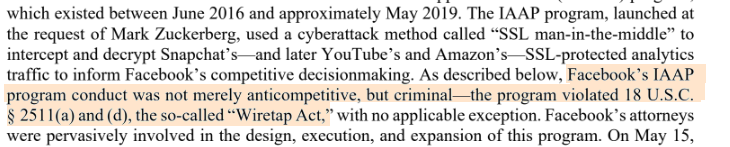

There is a current class action lawsuit against Meta in which court documents note* that the company may have breached the Wiretap Act. The analysis in this post is based on the content of court documents and on reverse engineering sections of archived Onavo Protect app packages for Android.

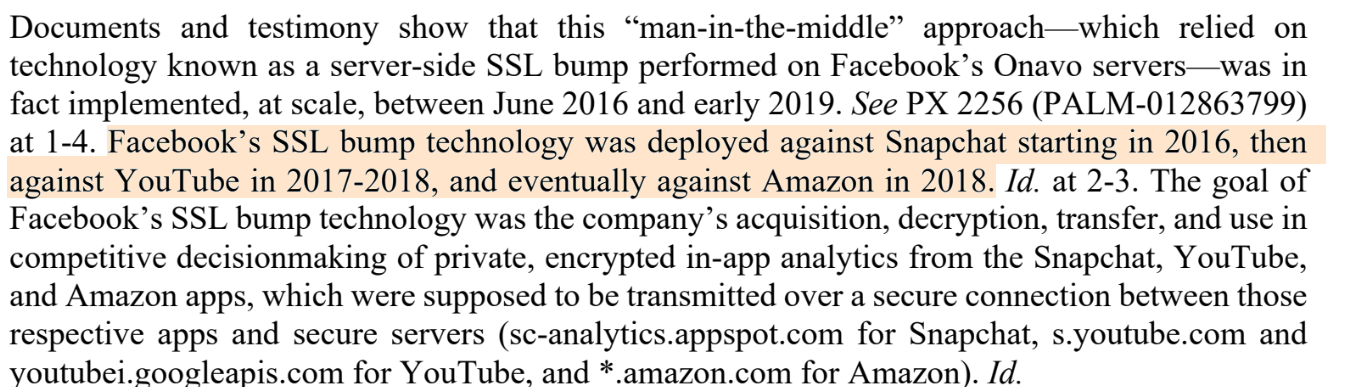

It is said that Facebook intercepted users' encrypted HTTPS traffic by using what would be considered a MITM attack. Facebook called this technique "ssl bump", appropriately named after the transparent proxy feature in the Squid caching proxy software, which was used to (allegedly) decrypt traffic to specific Snapchat, YouTube and Amazon domain(s). It is suggested to read a recent TechCrunch article for additional background on the case.

*A HN user clarifies:

"This is not a wiretapping case. It's an antitrust case; the claims are all for violations of the Sherman Act. Plaintiffs' attorneys _incidentally_ found evidence during discovery that Facebook may have breached the Wiretap Act."

Technical Summary

- Onavo Protect Android app, which had over 10 million Android installations, contained code to prompt the user to install a CA (certificate authority) certificate issued by "Facebook Research" in the user trust store of the device. This certificate was required for Facebook to decrypt TLS traffic.

- Some versions of the older app contain the Facebook Research CA certs as embedded assets in the distributed app from 2016. One cert is valid until 2027. Discovery content in court documents states that certificates are "generated on the server and sent to the device".

- Soon after the "ssl bump" feature was deployed in the Protect app, a newer version of Android was released that included improved security controls that would render this method unusable on devices with the newer operating system.

- A review of an old Snapchat app shows that its analytics domain did not employ certificate pinning, meaning that MITM / "ssl bumping" would have worked as described.

- In addition to the core functionality of gathering other apps' usage statistics by abusing a permission granted by the user, there also appears to be other functionality to obtain questionable sensitive data, such as the subscriber IMSI.

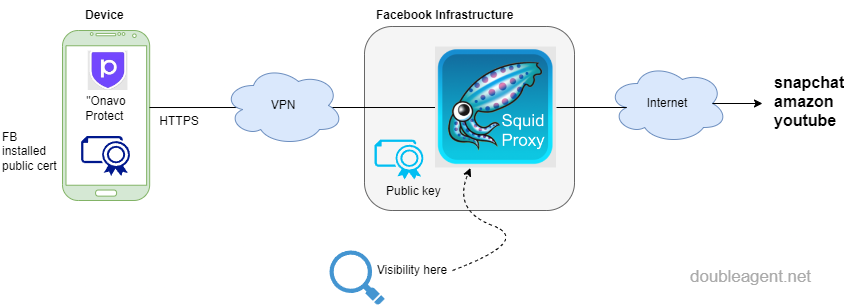

The setup most likely would have looked something like the following diagram:

Here we have a trusted cert installed on the device, all device traffic going over a VPN to Facebook controlled infrastructure, and traffic redirected into a Squid caching proxy set up as a transparent proxy with the 'ssl bump' feature configured. We know from the documents that various domains belonging to Snapchat, Amazon and YouTube were of interest. It's not known if any other user traffic was intercepted, or just proxied on. This type of information can't be obtained from looking at the archived Onavo Protect apps, so for the time being we have to rely on the content in the court documents made available to the public.
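To make the "specific domains" part concrete, here is a minimal sketch of the kind of per-connection decision a selectively bumping proxy makes. It is not Facebook's configuration, which is not public. The only hostname taken from this post is sc-analytics.appspot.com; the second entry in the list, the function names, and the assumption that non-target traffic was passed through untouched are all illustrative. "bump" and "splice" are the names Squid uses for decrypting versus tunnelling a connection.

```python
# Hypothetical target list. Only sc-analytics.appspot.com is named in this post;
# the second entry is a placeholder for illustration.
BUMP_TARGETS = ("sc-analytics.appspot.com", "analytics.example.test")


def matches_domain(hostname: str, target: str) -> bool:
    """True if hostname equals target or is a subdomain of it."""
    hostname = hostname.lower().rstrip(".")
    target = target.lower().rstrip(".")
    return hostname == target or hostname.endswith("." + target)


def proxy_action(sni_hostname: str, targets=BUMP_TARGETS) -> str:
    """Return 'bump' (decrypt and re-encrypt) or 'splice' (tunnel untouched).

    Assumption: traffic to non-target domains is simply passed through.
    """
    if any(matches_domain(sni_hostname, t) for t in targets):
        return "bump"
    return "splice"


if __name__ == "__main__":
    for host in ("sc-analytics.appspot.com", "www.example.org"):
        print(host, "->", proxy_action(host))
```

The match is done on whole DNS labels, so a lookalike such as evil-sc-analytics.appspot.com would not be treated as a target.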

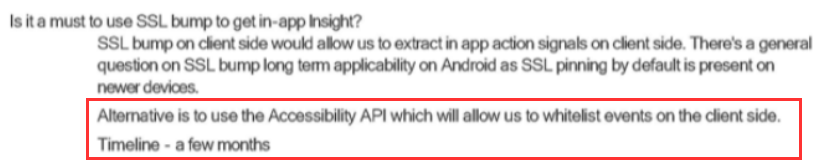

Over time, the success of their strategy of employing a transparent TLS proxy was diminishing due to improved security controls in Android. Additionally, certificate pinning adoption was said to be an issue. As an alternative, Facebook were considering using the Accessibility API.

This is what Google has to say about using the accessibility features on their operating system:

"only services that are designed to help people with disabilities access their device or otherwise overcome challenges stemming from their disabilities are eligible to declare that they are accessibility tools."

It's somewhat telling of a company that would consider abusing features designed to support people with disabilities for a competitive advantage. Generally, Android accessibility functionality misuse is attributed to malicious applications such as banking malware.

Motivation

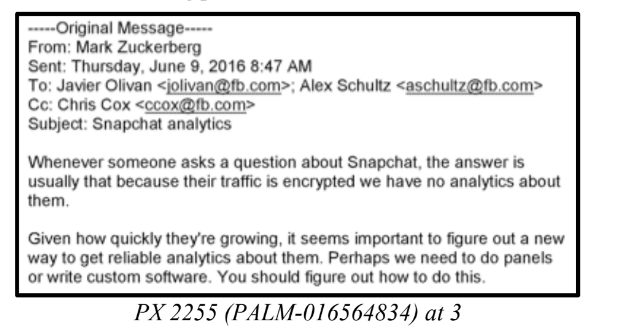

Mark Zuckerberg states the need for "reliable analytics" on Snapchat:

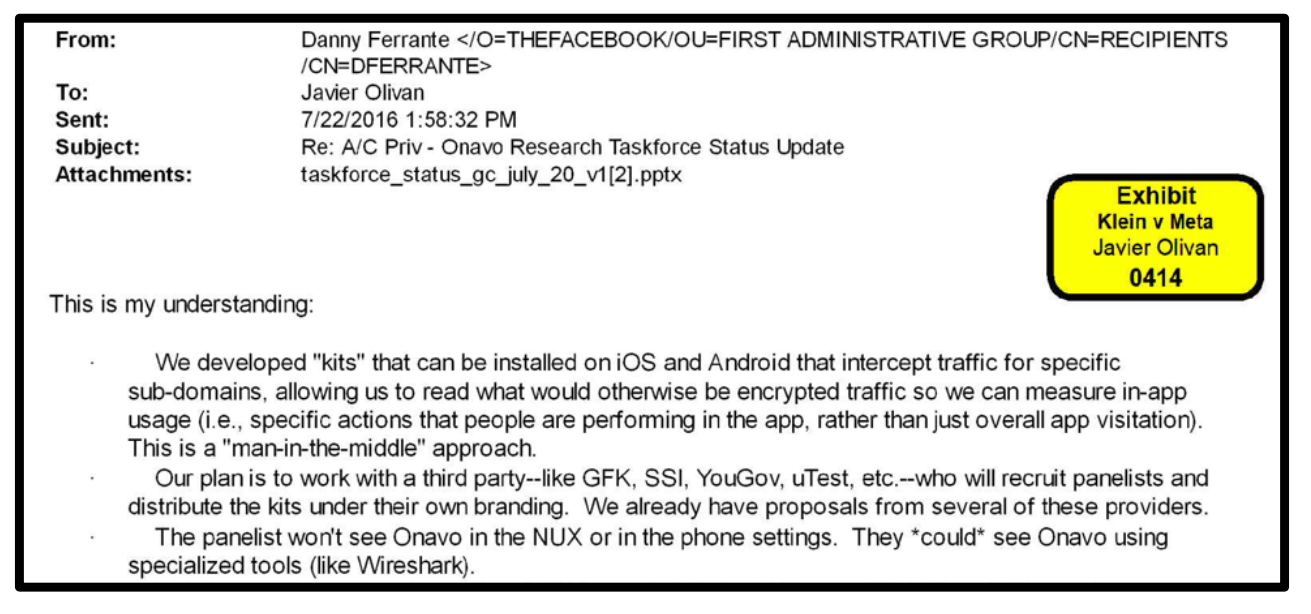

The solution? "Kits that can be installed on iOS and Android that intercept traffic for specific sub-domains":

My take on the above is that in addition to utilizing the Onavo Protect VPN app to intercept traffic for specific domains, there was an intention to rebrand the core technology and have it distributed in other applications. Facebook had acquired Onavo for approximately $120M USD in 2013 and needed to put this technology to good use. That price point should give a clear indication of the value they placed on the ability to gain competitor intelligence from people's phones and tablets.

Prior research on the iOS version notes that the Onavo VPN app was collecting some usage telemetry from iPhones. On Android we can see the app was pulling much more fine grained statistics from their users' devices by utilizing permissions granted under the pretext of showing the user app data usage (we will see how this looked in an embedded video below). But that was not enough: Facebook wanted to take this one step further and intercept encrypted traffic towards specific competitors' analytics domains in order to obtain data on the "in-app actions".

All they would need to do is to get the user to install a custom certificate into the user's phone's trust store (and be on specific Android releases).

In 2023, two subsidiaries of Facebook were ordered to pay a total of $20M by the Australian Federal Court for "engaging in conduct liable to mislead in breach of the Australian Consumer Law", according to the ACCC.

Facebook had shut down Onavo in 2019 after an investigation revealed they had been paying teenagers to use the app to track them. Also that year, Apple went as far as to revoke Facebook's developer program certificates, sending a clear message.

Despite the apps being taken offline, we are able to find old archived versions which enabled the technical insights offered in this post.

Technical analysis

Websites and applications on end user devices trust remote websites or servers over HTTPS/TLS due to public certificates that are stored in the device's trust store. These "certificate authority" certs are the "trust anchor" that applications rely on to verify they are communicating with the intended party. These certificates are generally distributed and stored within the operating system. By adding your own self-signed certificate to the appropriate trust store, it is often possible to intercept encrypted TLS traffic. Corporations may also do this as a means of inspecting outbound traffic from employees' devices. Security testers may also do this on their own devices. There are legitimate reasons for doing this. The question here is whether what Facebook did was legitimate, meaning, was it legal or not.
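The role of the trust anchor can be illustrated with a deliberately simplified toy model. This is not real X.509 validation: there are no signatures, expiry dates or hostname checks, only issuer names. "Example Public Root CA" is a made-up name; "Facebook Research" is the CA name referred to in this post. The point is only that the same forged leaf certificate is rejected or accepted depending on what is in the trust store.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cert:
    subject: str
    issuer: str


def chain_is_trusted(chain, trust_store) -> bool:
    """Toy check: every cert must be issued by the next cert in the chain,
    and the issuer of the last cert must be a trust anchor.
    Real validation also verifies signatures, validity periods and hostnames.
    """
    if not chain:
        return False
    for cert, parent in zip(chain, chain[1:]):
        if cert.issuer != parent.subject:
            return False
    return chain[-1].issuer in trust_store


# Hypothetical trust store contents before and after installing the extra CA.
system_store = {"Example Public Root CA"}
store_with_user_ca = system_store | {"Facebook Research"}

genuine = [Cert("sc-analytics.appspot.com", "Example Public Root CA")]
forged = [Cert("sc-analytics.appspot.com", "Facebook Research")]

if __name__ == "__main__":
    print("genuine, system store:", chain_is_trusted(genuine, system_store))
    print("forged, system store:", chain_is_trusted(forged, system_store))
    print("forged, with user CA:", chain_is_trusted(forged, store_with_user_ca))
```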

HTTPS was designed to give users an expectation of privacy and security. Decrypting HTTPS tunnels without user consent or knowledge may violate ethical norms and may be illegal in your jurisdiction.

Unlike Apple with iOS on the iPhone, Google has made numerous changes that make it extremely difficult to install a CA cert that will be trusted by most applications on the phone. In Android 11, released in September 2020, Google completely blocked the mechanism which the app used to prompt a user to install the cert, and no application would trust any certificate in the user store by default.

That said, as we will see later, at the time the Snapchat app did not implement cert pinning for its analytics domain. This would likely hold true for the other apps' domains. So it appears Facebook had taken advantage of this technical limitation / oversight by its competitors.

So today, technically speaking, it simply is not possible to do what Facebook had done back in 2016 to 2019. But it worked - so how did they do it? Fortunately, at least with Android and the Play store ecosystem, we are often able to go back in time and dig up old Android app packages.

The first thing is to install the Onavo app on a test handset to see how users would interact with the app. Despite the VPN connectivity not working and the actual backend service being down, we do get a glimpse of how the application coerces the user into accepting multiple permissions:

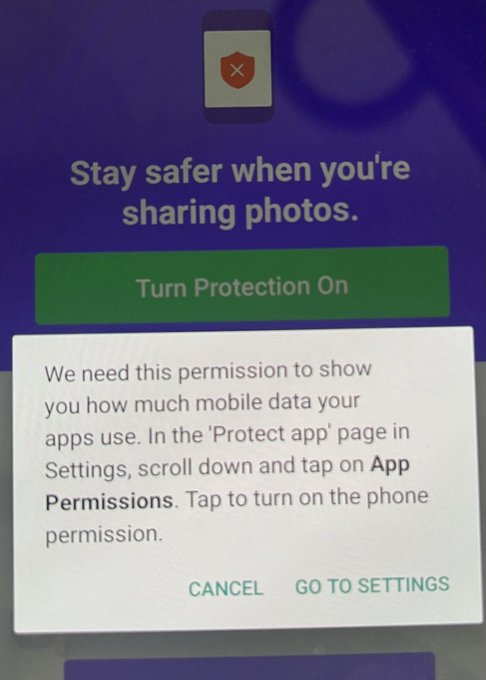

What we see here is that, under the pretext of providing the user 'protection', the app requests two permissions of particular concern:

- Display over other apps

- Access past and deleted app usage

"We need this permission to show you how much mobile data your apps use."

What they didn't explain is that this feature is not so much for the benefit of the user who installed the app, but actually Onavo/Facebook. And this type of information is valuable, to the tune of $120M (what FB paid in the acquisition).

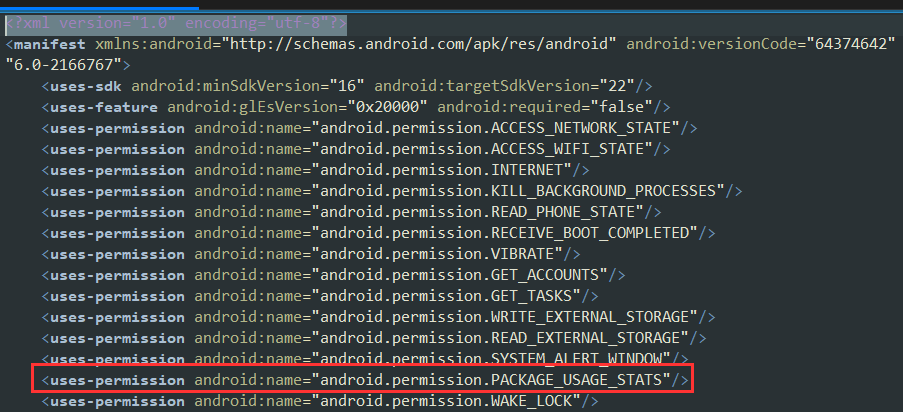

The Android manifest includes the uses-permission directive android.permission.PACKAGE_USAGE_STATS which is what we are agreeing to in the screenshot above:

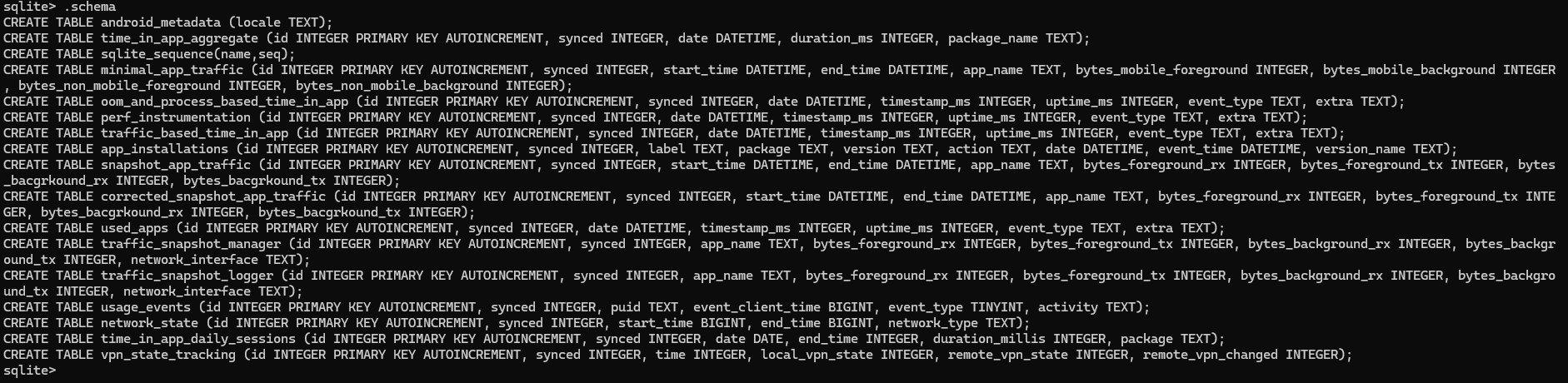
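For anyone wanting to repeat this check on another package, a minimal sketch of listing the requested permissions follows. It assumes the manifest has already been decoded to plain-text XML (for example by a decompiler such as JADX), since the manifest inside an .apk is stored in a binary format. The sample manifest and its package name are made up; the two permission names are the ones mentioned in this post.

```python
import xml.etree.ElementTree as ET

ANDROID_NS = "http://schemas.android.com/apk/res/android"


def requested_permissions(manifest_xml: str) -> list[str]:
    """Return the android:name of every <uses-permission> element."""
    root = ET.fromstring(manifest_xml)
    name_attr = f"{{{ANDROID_NS}}}name"
    return [
        el.get(name_attr)
        for el in root.iter("uses-permission")
        if el.get(name_attr)
    ]


# Made-up sample manifest; com.example.app is a placeholder package name.
SAMPLE_MANIFEST = """<manifest
    xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.example.app">
  <uses-permission android:name="android.permission.PACKAGE_USAGE_STATS"/>
  <uses-permission android:name="android.permission.READ_PHONE_STATE"/>
  <application/>
</manifest>"""

if __name__ == "__main__":
    for permission in requested_permissions(SAMPLE_MANIFEST):
        print(permission)
```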

Continuing with the "application stats" feature (assumed to be the original core functionality), we can dump the schema of the local database on the handset to get an idea on exactly what it was collecting on the device itself:

Mostly it seems they could just obtain statistics on in app usage of other applications and of course the network traffic usage for the apps. It's still somewhat high level statistics and clearly not enough granularity for what Mark was after, implied by one of the emails in the court documents. Intercepting the actual encrypted traffic towards the analytics domains of various competitors on the other hand, would do the trick. And to do this, Facebook would have to get a CA certificate somehow on the device.
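A schema dump of this kind can be reproduced with a few lines, assuming the app's local database is a SQLite file that has been copied off the handset. The sketch below reads the stored CREATE statements from sqlite_master. The demo table is invented purely to make the example runnable and says nothing about the real Onavo schema.

```python
import sqlite3


def dump_schema(conn: sqlite3.Connection) -> list[str]:
    """Return the CREATE TABLE statements stored in the database, ordered by table name."""
    rows = conn.execute(
        "SELECT sql FROM sqlite_master "
        "WHERE type = 'table' AND sql IS NOT NULL "
        "ORDER BY name"
    ).fetchall()
    return [row[0] for row in rows]


if __name__ == "__main__":
    # In practice: sqlite3.connect("path/to/copied.db"), where the path is
    # wherever the database file was pulled to. Here an in-memory database
    # with an invented table stands in for it.
    demo = sqlite3.connect(":memory:")
    demo.execute("CREATE TABLE demo_usage (package TEXT, bytes_sent INTEGER)")
    for statement in dump_schema(demo):
        print(statement)
    demo.close()
```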

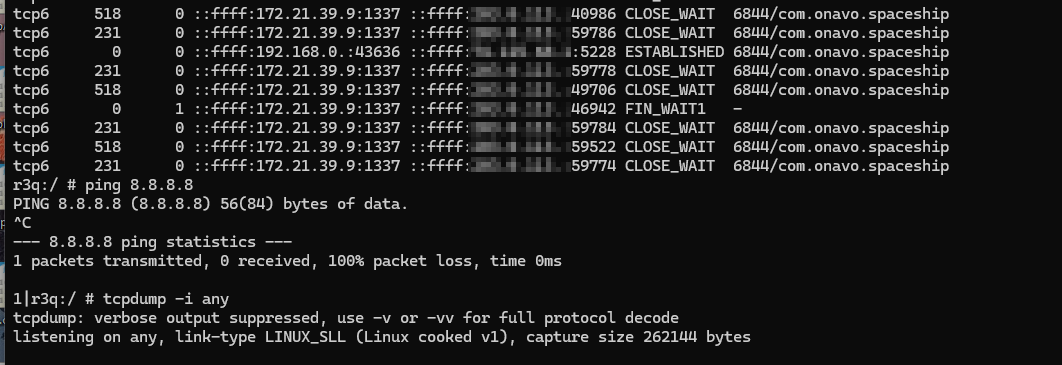

But we don't see any prompt to install any certificate. This is because the VPN did not successfully connect to the remote service, which appears to be a precondition. Time or interest permitting, I may go back to figure out how to trigger the certificate installation prompt.

Onto the CA certificates

Decompiling the app, we do see the functionality is there. In the following image, the method highlighted calls KeyChain.createInstallIntent() to install a certificate. Here a popup would appear asking the user for permission, with the name "Facebook Research"

KeyChain.createInstallIntent() stopped working in Android 7 (Nougat). A user would have to manually install the certificate. It would no longer be possible to have Facebook's CA cert installed directly in the app.

Another notable change in Android 7 - According to the Android documentation (emphasis mine):

By default, secure connections (using protocols like TLS and HTTPS) from all apps trust the pre-installed system CAs, and apps targeting Android 6.0 (API level 23) and lower also trust the user-added CA store by default

In other words, it appears other apps would have trusted certs in the user store on Android Marshmallow (Android 6) and below, but from Android 7, released on August 22, 2016, apps targeting the new API level would no longer trust them at all, unless the app opts in through a network security configuration referenced in its manifest file.
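The rule quoted from the Android documentation can be written down as a small decision function. This is a sketch of the default behaviour as I read the quote, not platform code: the function and parameter names are my own, and the "opt in" flag stands for an app explicitly allowing user-added CAs in its network security configuration.

```python
def app_trusts_user_cas(device_api: int, target_sdk: int, opts_in: bool = False) -> bool:
    """Sketch of whether an app's default TLS stack trusts user-installed CAs.

    device_api: API level of the Android version on the handset (24 = Android 7.0)
    target_sdk: API level the app declares it targets
    opts_in:    the app explicitly allows user CAs in its network security config
    """
    if device_api < 24:
        # Before Android 7 the user store was trusted by apps by default.
        return True
    if target_sdk <= 23:
        # Per the quoted documentation: apps targeting API 23 and lower
        # still trust the user-added CA store by default.
        return True
    return opts_in


if __name__ == "__main__":
    print(app_trusts_user_cas(device_api=23, target_sdk=23))
    print(app_trusts_user_cas(device_api=24, target_sdk=23))
    print(app_trusts_user_cas(device_api=24, target_sdk=24))
```

This also shows why the technique degraded gradually rather than overnight: a competitor app only stopped trusting the user store once it ran on a newer device and had been rebuilt against the newer API level.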

Another improvement to Android in version 7 was that it was made impossible to install certificates into the system store by any means except by fully rooting the device.

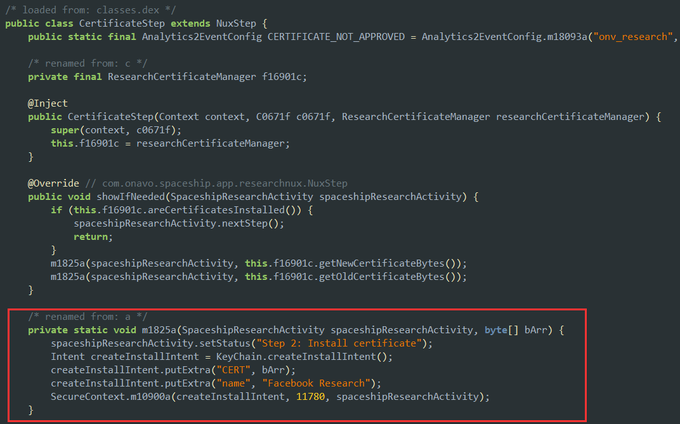

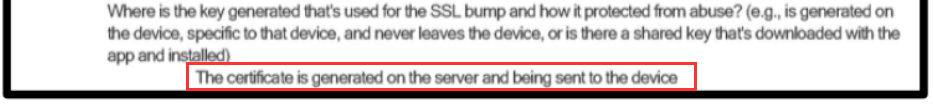

Regardless, the functionality remained in both the older and newer versions, all the way to the last published app in 2019. The actual MITM certificate was removed in 2017. Detail in the court documents may offer a plausible explanation:

Where is the key generated that's used for the SSL bump and how it protected from abuse? (e.g., is generated on the device, specific to that device, and never leaves the device, or is there a shared key that's downloaded with the app and installed)

The certificate is generated on the server and being sent to the device

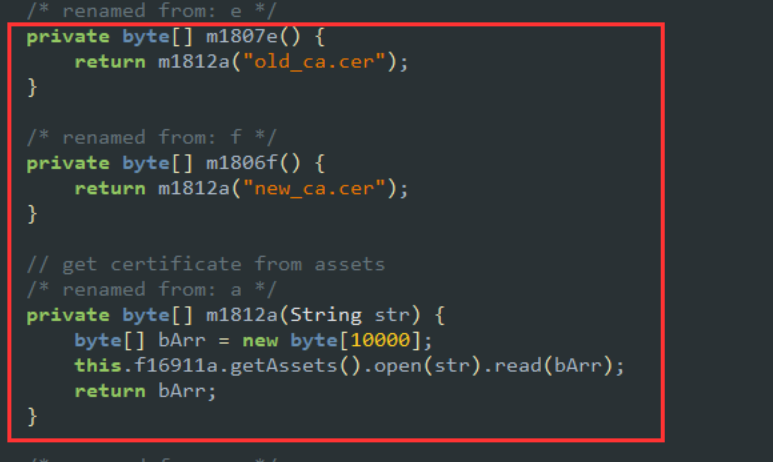

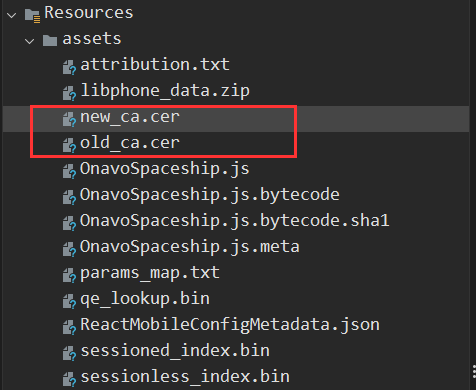

So we need to go back to much older releases before 2019, specifically a version from September 2017. The certificates in this version are found as assets named "old_ca.cer" and "new_ca.cer". The relevant code is found in the class ResearchCertificateManager.

They can be found under the "assets" folder (if uncompressing the .apk as a zip file). Observed in JADX:
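Since an .apk is a zip archive, locating embedded certificate files does not require a decompiler. A minimal sketch follows; the list of file extensions is my own guess at what is worth looking for, and the demo builds a small in-memory archive standing in for a real package, using the two asset names mentioned above.

```python
import io
import zipfile


def list_cert_assets(apk, suffixes=(".cer", ".crt", ".pem", ".der")):
    """Return sorted names of certificate-like files under assets/ in an .apk.

    apk may be a filesystem path or a binary file-like object.
    """
    with zipfile.ZipFile(apk) as zf:
        return sorted(
            name
            for name in zf.namelist()
            if name.startswith("assets/") and name.lower().endswith(suffixes)
        )


def build_demo_apk() -> io.BytesIO:
    """Build an in-memory zip with placeholder contents standing in for an .apk."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as zf:
        zf.writestr("assets/old_ca.cer", b"placeholder")
        zf.writestr("assets/new_ca.cer", b"placeholder")
        zf.writestr("classes.dex", b"placeholder")
    buffer.seek(0)
    return buffer


if __name__ == "__main__":
    print(list_cert_assets(build_demo_apk()))
```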

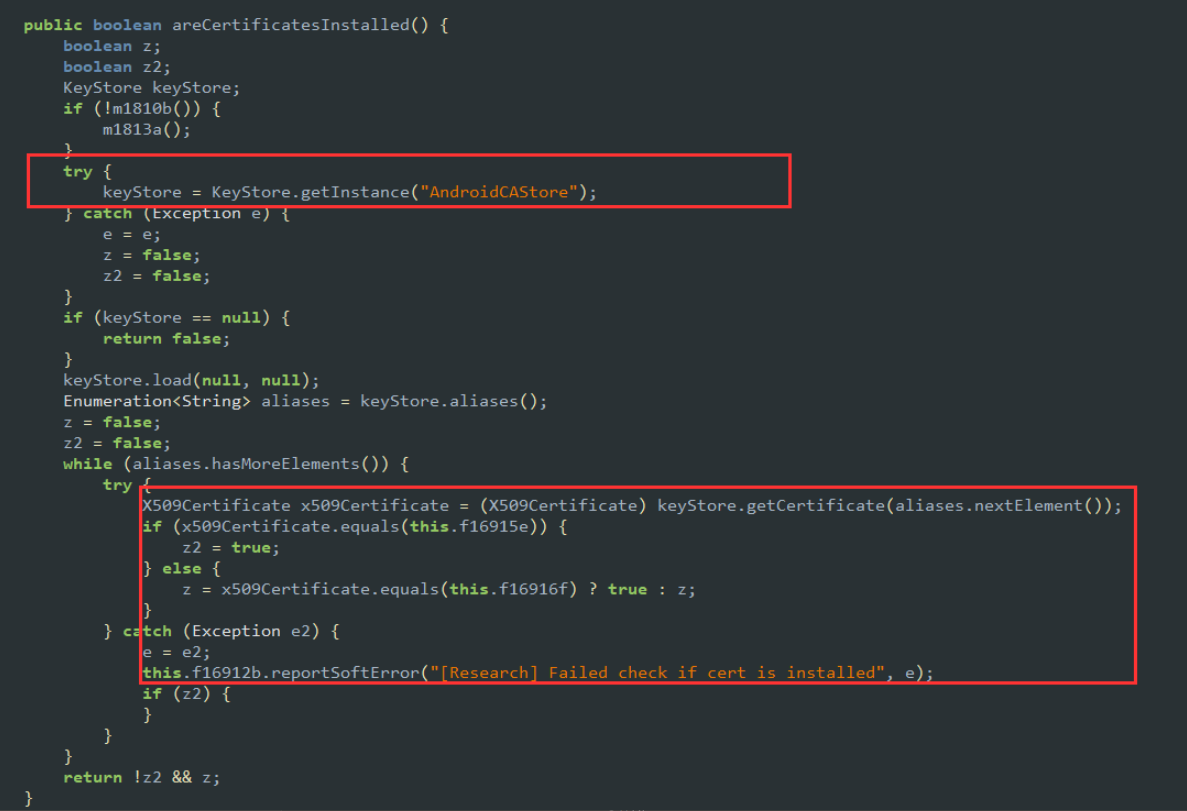

Also observing the routine to check if the certificates have been installed or not:

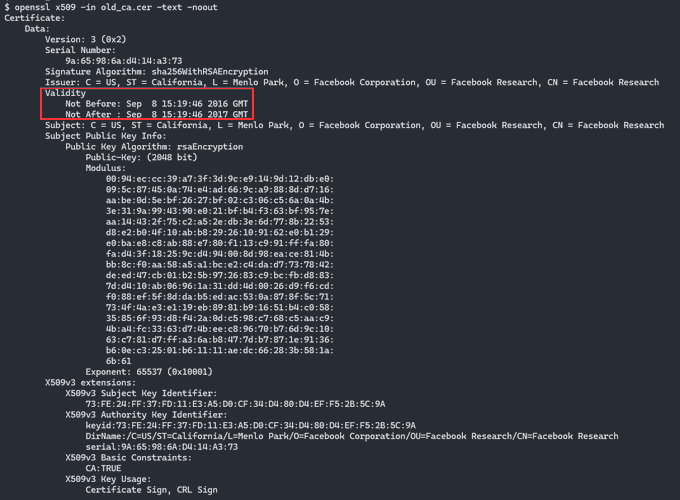

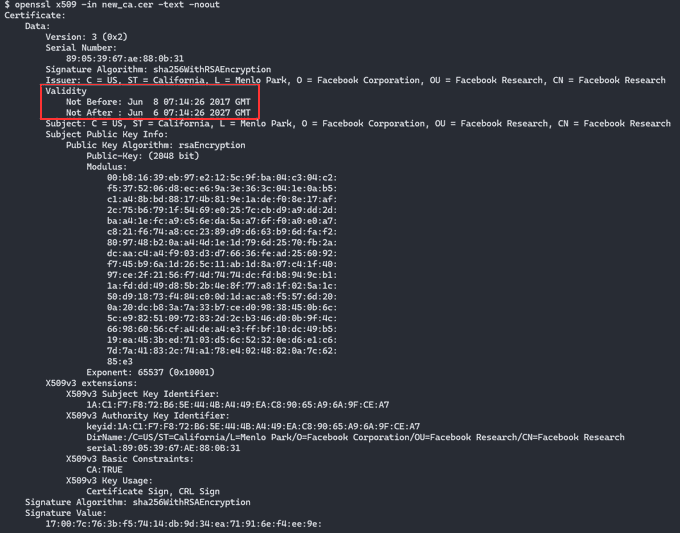

Now why would there be two certificates (old and new)? Here are the two certificates pulled from one version of the app. Whoever created the first certificate had only issued it to be valid for one year. If this was an oversight, they did manage to figure it out before the expiry time.

old_ca.cer vs new_ca.cer

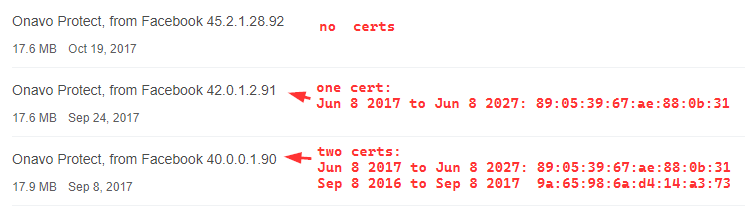

I have not been able to find all versions of the .apk online, but enough to draw the following conclusion:

- The first certificate was valid from Sep 8th 2016, some months after Mark Zuckerberg put the call out to gain further insight into Snapchat (email dated June 9th, 2016)

- The second certificate, valid from Jun 8th, 2017, was added alongside the first. It will be valid until Jun 8 2027.

- At least from Oct 19th, 2017, there are no certs; the second cert was deleted from the app completely. As stated earlier, court documents explain certificates were obtained from the server. I have yet to locate the functionality relevant to this in the apps I have obtained from archives, and more work needs to be done here.

Versions with certificates found with their respective fingerprints:

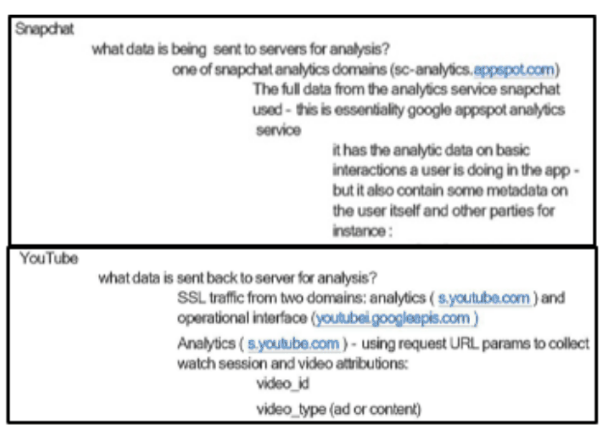
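Fingerprints like these can be recomputed from the extracted files with the standard library alone. The sketch below hashes the DER encoding of a certificate, converting from PEM first if needed, and formats the digest as colon-separated hex. The choice of SHA-256 as the default is an assumption on my part, and the demo input is placeholder bytes rather than a real certificate.

```python
import hashlib
import ssl

PEM_MARKER = b"-----BEGIN CERTIFICATE-----"


def cert_fingerprint(cert_bytes: bytes, algorithm: str = "sha256") -> str:
    """Return a colon-separated uppercase hex digest of a certificate.

    Accepts DER bytes or a PEM file's bytes; PEM is converted to DER first
    so that both encodings of the same certificate give the same result.
    """
    if cert_bytes.lstrip().startswith(PEM_MARKER):
        cert_bytes = ssl.PEM_cert_to_DER_cert(cert_bytes.decode("ascii"))
    digest = hashlib.new(algorithm, cert_bytes).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


if __name__ == "__main__":
    # Placeholder bytes stand in for the contents of an extracted .cer file.
    placeholder_der = b"not a real certificate"
    print(cert_fingerprint(placeholder_der))
    print(cert_fingerprint(placeholder_der, "sha1"))
```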

The court documents state that there was additional interception of YouTube and Amazon at later dates. Here we would have to dig further to find out in which apps and how this was done:

Back to the pinning question

Any app doing full certificate pinning would have prevented this technique from working. Around the time period in question, Snapchat was doing some certificate pinning. But not everywhere.
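Why pinning defeats this technique, and why an unpinned domain does not, can be shown with a small sketch. It is a simplification: here the pin is a SHA-256 hash of the whole certificate, whereas real apps often pin a hash of the public key instead, and the certificate bytes are placeholders. The function names are my own.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def connection_allowed(cert_der: bytes, chain_trusted: bool, pins=None) -> bool:
    """Decide whether a client would accept the presented certificate.

    chain_trusted: result of ordinary trust store validation
    pins:          set of allowed SHA-256 hex digests, or None if the app
                   does not pin this domain
    """
    if not chain_trusted:
        return False
    if pins is None:
        # No pinning: anything the trust store accepts is accepted.
        return True
    return sha256_hex(cert_der) in pins


# Placeholder certificate bytes for illustration only.
GENUINE_CERT = b"genuine-server-cert"
FORGED_CERT = b"proxy-generated-cert"
PINS = {sha256_hex(GENUINE_CERT)}

if __name__ == "__main__":
    # With the intercepting CA installed, the forged chain validates.
    print("unpinned domain, forged cert:", connection_allowed(FORGED_CERT, True))
    print("pinned domain, forged cert:", connection_allowed(FORGED_CERT, True, PINS))
    print("pinned domain, genuine cert:", connection_allowed(GENUINE_CERT, True, PINS))
```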

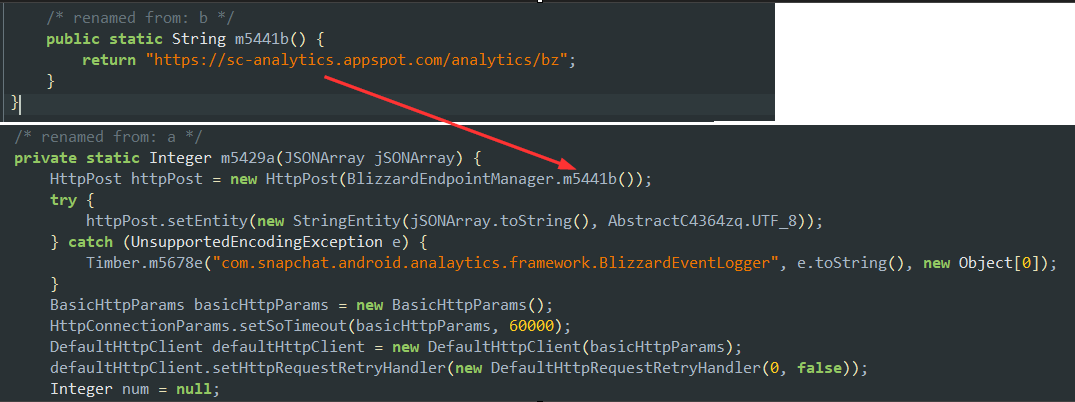

We can go back and grab an old Snapchat app and check for ourselves. What was the domain? According to one of the artefacts in the document discovery, it was sc-analytics.appspot.com:

And behold, in a decompilation of an old Snapchat app, traffic to this domain did not use certificate pinning:

As discussed earlier, Facebook were aware of the security enhancements in Android and the wider adoption of pinning, as shown by this statement (referenced earlier):

There is a general question on SSL bump long term applicability on Android as SSL pinning by default is present on newer devices.

What else?

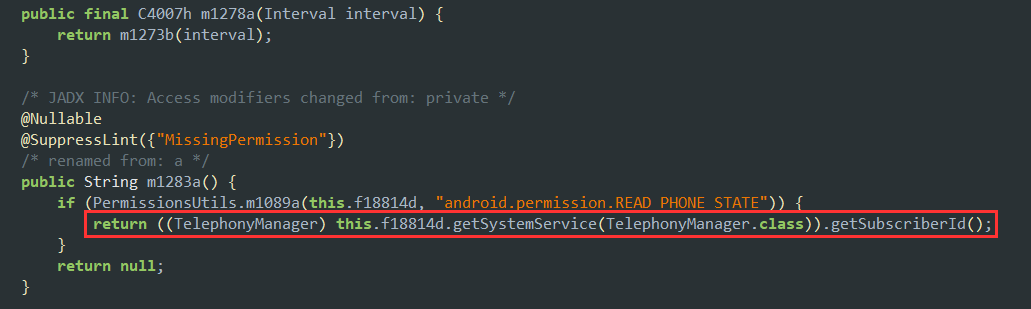

This one caught my eye, a request to obtain the subscriber IMSI. A very sensitive bit of data indeed:

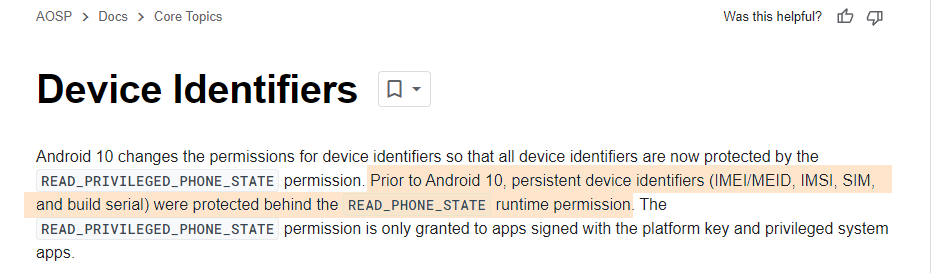

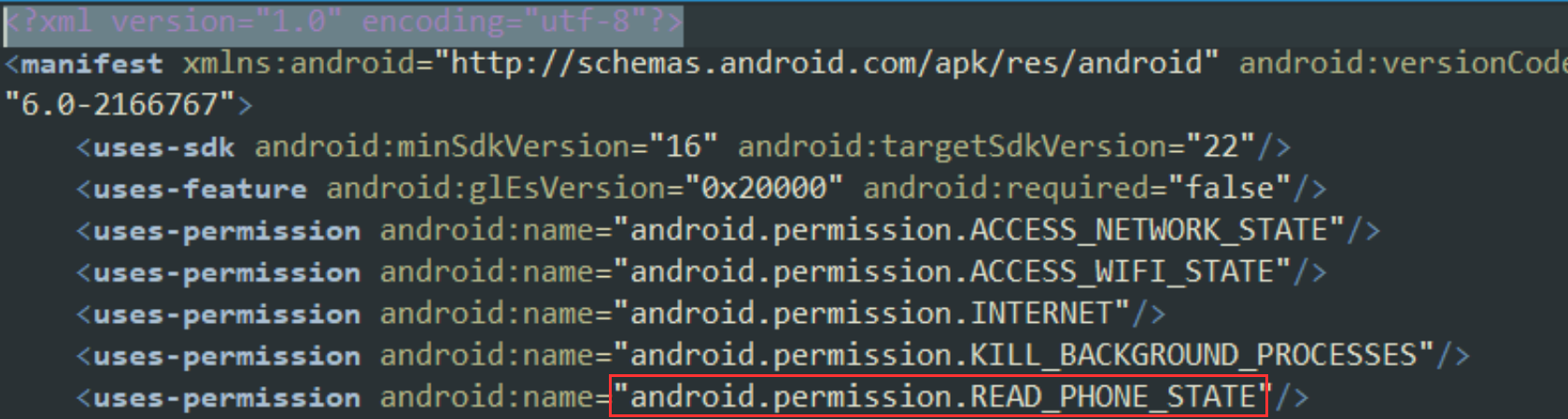

Initially I was wondering how this is even possible, and it seems at the time, it was actually possible with the permission READ_PHONE_STATE:

Which of course was defined in the app's manifest:

Given this discovery, there is probably more to explore.

Wrapping up

While this is all "old news" in the sense that it happened years ago, it is interesting from a technical standpoint to see how far application developers, and even companies like Facebook, will go to abuse permission models on mobile phones.

And there certainly is more to dig into, such as the routine to trigger the CA install procedure, how certs were added after 2017 and what else the Onavo application was collecting. It would also be nice to find the iPhone version of the application, if anyone knows where to find copies.

If the class action lawsuit progresses in an interesting way, perhaps this could provide further motivation to continue the exploration.
